Talk abstract:

Deep learning has been applied pervasively across many domains with tremendous success. However, its adoption in cyber security remains limited because of its lack of interpretability. In this talk, I will present our work, LEMNA, a high-fidelity explanation method dedicated to the application of deep learning in reverse engineering. The core idea of LEMNA is to approximate a local area of the complex deep learning decision boundary with an interpretable model, which essentially captures the set of key features used to classify an input sample. The local interpretable model is specially designed to meet two requirements of explaining deep learning in reverse engineering: (1) handling feature dependency and (2) handling nonlinear local boundaries. I will also demonstrate how LEMNA helps machine learning developers validate model behavior, troubleshoot classification errors, and automatically patch the errors of the target models.

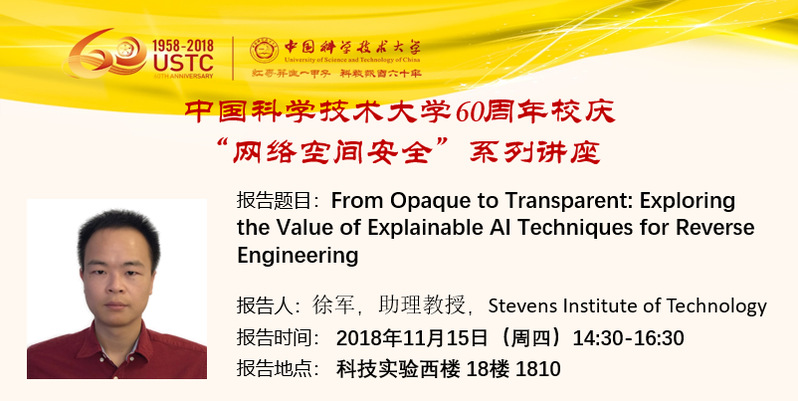

Speaker bio:

Jun Xu is an Assistant Professor in the Computer Science Department at Stevens Institute of Technology. He received his PhD from Pennsylvania State University and his bachelor's degree from USTC. His research lies mainly in software security and system security. He has published many papers at top-tier cyber security conferences, such as ACM CCS and USENIX Security. His recent work on explaining deep learning in security applications won the outstanding paper award at ACM CCS 2018. He is a recipient of the Penn State Alumni Dissertation Award, the RSA Security Scholar Award, and the USTC Guo-moruo Scholarship.

All interested faculty and students are welcome to attend!